The Curmudgeon's Fallacy

The belief that any preventive measure used to minimize risk of a catastrophe will be offset by increased human fecklessness. Put another way, the curmudgeon's fallacy maintains that items such as safety equipment or regulations will have almost no net impact on safety or health, since people will simply become more feckless. The curmudgeon's fallacy actually may be applied more broadly; it is not restricted to measures intended to improve safety and health. Essentially, the curmudgeon's fallacy applies to any social goal whatever.

WEAK FORM

The weak form of the curmudgeon's fallacy is (being the weak form) less of a fallacy. It holds that constraints and safety measures imposed externally (such as traffic safety laws) will have little or no net impact on safety, since people will merely assume they are protected. Similarly, people with health insurance will be more careless with their health, people with airbags will drive more carelessly, and people with legal protections against fraud or false advertising will be more easily duped.

In this sense, the assumption is not so much a fallacy when it is understood as a critique of policy measures. It is valid to say that people might respond to a protective measure by being less cautious about that particular risk. In some cases, such as unwanted pregnancies, it's probably true that massively relaxed social sanctions against extramarital pregnancies have indeed increased their frequency. However, this involves a confusion of changing consequences with changing motivations. Today, few people believe extramarital pregnancies are so awful that it would be sensible to execute unwed mothers. More on that, below.

STRONG FORM

In its strong form, the curmudgeon's fallacy holds that measures taken by oneself to prevent a catastrophe are as futile as those imposed from outside. For example, when I was younger I used to find healthy-living enthusiasts utterly tiresome and silly, and (privately) ridiculed the fact that they were usually sickly, joyless people. After decades of living and observing, I understand that people usually take up such lifestyles, as I did, in response to specific problems: changing metabolisms, risks of heart disease, incipient obesity, and so forth. Usually, people with congenital health problems run into this concern immediately. I would wait until I looked in the mirror and gagged at what I saw.

The strong-form curmudgeon's fallacy is more related to a contempt for the illusion of effective action. Eating greasy hamburgers with a mountain of fries and a milkshake is actually a very pleasant activity; it's natural to resent the voice that tells one to switch to rice crackers and steamed asparagus. It's natural to sneer that we're all going to die anyway. However, this is another fallacy: that a result is inconsequential if it doesn't last forever. Murder merely hastens the inevitable, but we still regard it as a horrible crime. Democratic institutions are going to disintegrate into despotic ones, some day, but that doesn't mean they're worthless while they last.

More directly, the strong-form curmudgeon's fallacy maintains that self-imposed safety restraints are really a failure to accept the weak-form version of the fallacy. To illustrate this point, suppose somebody reads an article that says that using sunscreen greatly reduces the risk of skin cancer. So she starts wearing the proper SPF sunscreen whenever she's outdoors. In effect, she's acting as if there were some law that required her to do this, even though no such law exists and would be extremely difficult to enforce anyway. She's internalized the expert advice. The fallacy assumes here that she will abandon alternative precautions, like avoiding exposure to direct sunshine altogether. The strong form appears to reflect a global assumption that new precautions, such as the use of sunscreen or cars with airbags, do not reflect caution by the adopter, but displace caution--even when the person taking the precaution is doing so precisely because she is cautious.

MATHEMATICAL FORM

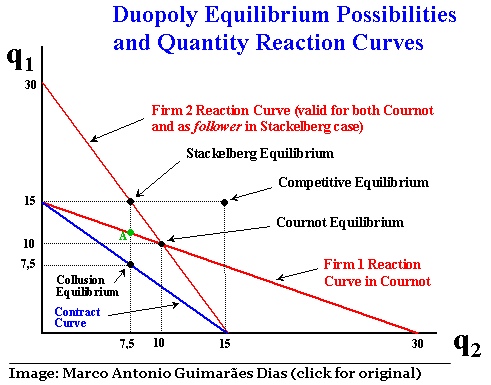

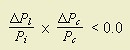

The curmudgeon's fallacy can be expressed in the language of mathematics. Let Pd be the probability of a disaster (e.g., a fatal auto accident). Pc is the probability of a crisis occurring, such as a car accident (which may or may not be fatal); Pl is the probability of that crisis being lethal.
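One natural way to tie these three quantities together is the product rule. This assumes Pl is read as the conditional probability that a crisis is lethal once it has occurred, which the text does not state explicitly:

```latex
P_d = P_c \cdot P_l
```

Under that reading, a measure can lower Pd by lowering either factor, provided the other factor does not rise enough to cancel the gain.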

According to the curmudgeon's fallacy, it makes no difference whether it is Pc or Pl that is consciously altered: if a measure reduces Pl, making accidents less lethal, then accidents will become more likely; if a legal measure were contrived to reduce Pc instead, causing accidents to be less likely, then accidents would become more lethal. Public policy will invariably increase Pd. At the very least, if by some miracle the number of fatalities per passenger is demonstrably reduced, then some other awful thing must have happened.
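To make the claim concrete, here is a minimal sketch. It assumes Pd is the product of Pc and Pl, that a safety measure cuts Pl by some fraction, and that drivers respond by raising Pc by some fraction. The function names and all the numbers are hypothetical, chosen only for illustration. In these terms, the fallacy amounts to insisting that the behavioral offset always reaches or exceeds the break-even value.

```python
def disaster_probability(p_crisis, p_lethal):
    """Pd = Pc * Pl, assuming Pl is the chance that a crisis turns out lethal."""
    return p_crisis * p_lethal


def after_measure(p_crisis, p_lethal, lethality_cut, behavioral_offset):
    """Pd after a measure cuts Pl by `lethality_cut` (a fraction) while
    riskier behavior raises Pc by `behavioral_offset` (a fraction)."""
    new_p_lethal = p_lethal * (1.0 - lethality_cut)
    new_p_crisis = p_crisis * (1.0 + behavioral_offset)
    return disaster_probability(new_p_crisis, new_p_lethal)


def break_even_offset(lethality_cut):
    """Rise in Pc that exactly cancels a given cut in Pl.

    Solves (1 + k) * (1 - r) = 1 for k, giving k = r / (1 - r).
    """
    return lethality_cut / (1.0 - lethality_cut)


if __name__ == "__main__":
    # Hypothetical numbers, for illustration only.
    p_crisis, p_lethal = 0.01, 0.10
    cut = 0.40  # the measure makes a crash 40% less likely to be fatal

    baseline = disaster_probability(p_crisis, p_lethal)
    for offset in (0.0, 0.2, break_even_offset(cut), 1.0):
        pd = after_measure(p_crisis, p_lethal, cut, offset)
        print(f"offset={offset:.2f}  Pd={pd:.5f}  baseline={baseline:.5f}")
```

With a 40% cut in lethality, crashes would have to become about two thirds more frequent before the measure did no good. The weak form says that might happen; the fallacy says it always does.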

In some cases, it is true that unintended consequences do indeed have the opposite sign and sometimes they do exceed the intended effect. Moreover, as the curmudgeon is the first to point out, there are orthogonal consequences as well. Too many regulations will interfere with each other or suppress productive activity. Safety regulations often do have perverse incentives on behavior or personal health choices. This has led to the introduction of game theory to the analysis of public policy. However, it is most rash to insist that it's always the case. This is why the expectation of large countervailing consequences is a good critique but a poor ideology.

INCIDENCE OF THE FALLACY

Typically, when conservative older men congregate, examples of the curmudgeon's fallacy tend to receive a cordial hearing. A common myth is that airbags made drivers so much more careless that the carelessness offset the gain in safety (example). The author of that example, of course, uses the seatbelt rather than the airbag:

> This surprising result has triggered a number of studies, most of which have come to similar conclusions. In fact, no jurisdiction that has passed a seat belt law has shown evidence of a reduction in road accident deaths. To explore this odd but highly robust finding, experimenters asked volunteers to drive five horsepower go-karts with and without seat belts. They found that those wearing seat belts drove their karts faster. While this does not prove that car drivers do the same, it points in that direction.
>
> A similar study was done with real drivers on public roads. When subjects who normally did not wear seat belts were asked to do so, they were observed to drive faster, followed more closely, and braked later. Statistics from the United States indicate that as more and more states required seat belt use, the percentage of drivers and passengers killed in their seat belts increased.
>
> The cliche that seat belts save lives is true in the lab and on paper, and it's true if driver behavior does not change. But behavior does change.

Of course, if the share of motorists wearing seatbelts increases sharply, the share of motorists killed in accidents wearing seatbelts will also increase, simply because there will always be accidents that would have killed the motorist anyway. Likewise, the author cites a test that shows that motorists responded to wearing seatbelts by driving faster, braking later, and following other vehicles more closely. The author cites no actual study, which leads me to suspect the auto industry commissioned these studies (since the auto industry has long opposed any form of health or safety regulation of its products).
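The objection about the rising share of belted fatalities can be checked with simple arithmetic. The sketch below assumes belted and unbelted motorists crash equally often (that is, no behavioral change at all) and that a belt cuts the chance of dying in a crash by a fixed fraction. The function names and the 50% effectiveness figure are hypothetical, not taken from the quoted essay or from any study.

```python
def belted_share_of_deaths(wearing_rate, belt_effectiveness):
    """Fraction of fatalities who were wearing a belt.

    Assumes belted and unbelted motorists crash equally often and that a
    belt cuts the chance of dying in a crash by `belt_effectiveness`.
    """
    belted = wearing_rate * (1.0 - belt_effectiveness)
    unbelted = 1.0 - wearing_rate
    return belted / (belted + unbelted)


def relative_deaths(wearing_rate, belt_effectiveness):
    """Total deaths relative to a world in which nobody wears a belt."""
    return 1.0 - wearing_rate * belt_effectiveness


if __name__ == "__main__":
    effectiveness = 0.5  # hypothetical: a belt halves the risk of death
    for rate in (0.2, 0.5, 0.8):
        share = belted_share_of_deaths(rate, effectiveness)
        total = relative_deaths(rate, effectiveness)
        print(f"wearing={rate:.0%}  belted share of deaths={share:.1%}  "
              f"total deaths vs. no belts={total:.0%}")
```

Under these assumptions, the belted share of deaths climbs as more people buckle up even while total deaths fall, so a rising "percentage killed in their seat belts" is not, by itself, evidence that belts fail.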

A careful reading of the literature reveals that the author is assuming his readers have a poor understanding of statistical inference. To test something like homeostatic responses to safety regulations, one has to use regression analysis with hypothesis testing to see whether the null hypothesis (viz., that seatbelts have no effect on driving behavior) can be rejected. A study can then apply a Wald test to that null hypothesis, which means it can report that, while safety equipment has low predictive value for behavior on its own, it may be part of a battery of factors that jointly do predict it.

Yet elsewhere, where the author cites a study (?) that fails to show that seatbelt laws produced a year-on-year reduction in traffic fatalities, the null hypothesis would be that seatbelt laws had no detectable effect on traffic fatalities. Since a law usually has a slow impact on behavior, failing to reject that null is close to inevitable. Over a period of four years, the estimated coefficient for seatbelt laws on traffic fatalities would almost necessarily be quite small, and its p-value (i.e., the measure of how surprising such an estimate would be if the laws had no effect) correspondingly large.

However, law enforcement officials, private sector insurance carriers, and medical personnel at hospitals reliably warn motorists to wear seatbelts. From year to year, there is a distinct downward trend in traffic fatalities per passenger mile; this is slightly surprising given increases in road congestion, especially at hours late into the night. Cars have undergone numerous waves of safety-enhancing technology modifications besides seatbelts; these modifications have been adopted in many countries, reflecting agreement across ideological regimes. Additionally, there are long-term changes in attitudes about pedestrians that have nothing to do with increased motorist protection and everything to do with suburbanization of the population.

In other words, the author of the article applied very different standards for testing behavioral homeostasis and for testing regulatory effectiveness. The standard for homeostasis could be set very low, perhaps by allowing a Wald test; for regulatory effectiveness, the standard of rigor was markedly higher: the p-value for the laws had to be less than 0.05, a nearly impossible standard in public policy research. Laws have a lagged effect on behavior, and auto safety is very complex; since any hypothesis testing may have included autocorrelation effects, dummy variables for many other explanatory variables (like jurisdictions), and a counting parameter for the passage of time, it's almost inevitable that the coefficient on seatbelt laws could be reduced to something quite small.
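How hard the 0.05 threshold is to clear with a short, noisy series can be illustrated with a small simulation. This is only a sketch under strong assumptions, not a reconstruction of any study the essay cites: four years of fatality figures before a law and four after, independent normally distributed noise, and an exact one-sided permutation test standing in for the regression a real study would use. All names and numbers are hypothetical.

```python
import itertools
import random


def permutation_p_value(before, after):
    """One-sided exact permutation test that `after` has the lower mean.

    Returns the share of all ways to relabel the pooled values whose
    mean drop (before minus after) is at least as large as observed.
    """
    pooled = list(before) + list(after)
    n_before = len(before)
    n_after = len(pooled) - n_before
    total = sum(pooled)
    observed = sum(before) / n_before - sum(after) / n_after
    hits = 0
    count = 0
    for idx in itertools.combinations(range(len(pooled)), n_before):
        b_sum = sum(pooled[i] for i in idx)
        drop = b_sum / n_before - (total - b_sum) / n_after
        if drop >= observed - 1e-9:  # small tolerance for float rounding
            hits += 1
        count += 1
    return hits / count


def estimated_power(true_drop, noise_sd, years=4, trials=300, alpha=0.05, seed=0):
    """Share of simulated studies reaching p < alpha when the drop is real."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(trials):
        before = [rng.gauss(100.0, noise_sd) for _ in range(years)]
        after = [rng.gauss(100.0 - true_drop, noise_sd) for _ in range(years)]
        if permutation_p_value(before, after) < alpha:
            rejections += 1
    return rejections / trials


if __name__ == "__main__":
    # Hypothetical: fatalities indexed to 100, year-to-year noise of 5 points.
    for drop in (3.0, 10.0, 20.0):
        print(f"true drop={drop:>4}  share of studies with p<0.05="
              f"{estimated_power(drop, noise_sd=5.0):.2f}")
```

In this toy setting, a real but modest drop fails the significance test in most simulated studies, so a "no detectable effect" finding over a few years says little about whether the law works.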

Finally, the essay leaves open the question: was the unintended consequence greater than the intended effect? Since the tendency has been for traffic fatalities to go down, and since private sector initiatives as well as governmental ones work the same way, it seems clear that the curmudgeon's premise has failed. Traffic regulations may have made drivers more dangerous, but the increased recklessness of modern drivers, combined with greater congestion, has not sufficed to offset the aggregate effect of safety equipment and traffic regulations. Even the essay cited had to insert the weasel words, "fatality rates do not decrease as expected." They decreased, but he has some straw man out there of what was "expected" by activists.

Environmental regulations often come under attack as well; for example, using the flimsiest empirical foundations of all (the case of one [1] listed species), one of the posts at Freakonomics claims that the Endangered Species Act incentivizes property owners to destroy all of the listed specimens on their property lest development on their property be restricted. Of course, that's about all there is to Freakonomics: arguments that any public choice will have countervailing effects, QED, public choice is always bad and must be dismissed in all times and all places.

CONCLUSION: A PHILOSOPHICAL ASIDE

I never dared to be radical when young for fear it would make me conservative when old.
--"Precaution," Robert Frost, 1936

The curmudgeon's fallacy is that paradox of paradoxes: a precaution against precaution. A man I know well and much respect was addicted to the fallacy and used it reliably, since he was so well-endowed with natural caution and a singularly robust constitution. Once I mentioned how scandalized I was, reading Adam Smith's Wealth of Nations, about the stupendous infant mortality of 18th century Europe. He replied that he was not so sure infant mortality was such a bad thing, and insisted that I explain why I thought it was. It was a curious quirk of his character that his ideology had no part in his behavior, and he was of all men one of the most tender and generous, even though such brutal ideas flourished in his head.

At the back of the curmudgeon's fallacy is a sense that the lifelong struggle to preserve life has been a fool's errand; and in the waning years, as excellence seems to fade, there's a regret that no distinction was made between fit life and unworthy life. The curmudgeon usually lacks the will and barbarism to follow this through; but he also develops an imbalanced preference for his own "gut" prejudices over the research and formal testing of experts. At the back of this mistrust is a sense that the professional pursuit of safety, peace, and prosperity has merely spawned weakness and dependency, and that what the world really needs is a good, long epidemic to weed out the weak. As for me—I am not Lycurgus, nor was meant to be.
