EU Research versus the US variety

This is a post about developing trends, not about the two forming rival blocs. Via a comment by Jeff at Europhobia comes this article in Red Herring (link dead) on the comparative prospects for research in the US and the EU. My own view is that the USA suffers from two handicaps: market fundamentalism, which prevents it from implementing a sound industrial policy (and retaining manufacturing jobs), and sectarian fundamentalism (“Christian” identity politics), which makes it impossible to cultivate future generations of scientists from the ranks of younger children. Here’s a short post at Chris Mooney’s site that I agree with, and here’s “The Flight From America” (via Rue a Nation). However, I do like to read alternative opinions, so let’s have a look at “Can Europe Survive?”

Red Herring: Mr. Martikainen, a Finn, started developing a router (hardware that directs streams of data from one computer to another) back in 1982 at VTT, a research institute in Espoo, Finland. The Finnish companies financing the research, including Nokia, didn’t see the potential, so the project was dropped in 1986, shortly before an American startup called Cisco commercialized similar technology. Cisco went on to dominate basic corporate networking gear, with annual sales of more than $23 billion. Mr. Martikainen today works as a professor and researcher; his prototype gathers dust in a university display.

The article offers three such examples, then claims they illustrate a trend. However, there are literally scores of famous American concepts, like fuzzy logic (developed at Berkeley but commercialized mostly in Japan), which could not flourish on US soil. There is no universally correct model for turning research into commercial technology.

Europe has always produced great science and technology. It just hasn’t been very good at commercializing it. Disruptive technologies like GSM—now the most widely adopted mobile technology in the world—and Linux, open-source software that is arguably the biggest threat that Microsoft has had to face to date, were invented in Europe. So was the web.

By the way, Linux is actually a kernel (by far the most widely used one) for the GNU operating system. Linux (the kernel) was developed by a large group of volunteers, of whom Linus Torvalds was the predominant one. It's really hard to ascribe a national identity to a program developed by literally thousands of coders working voluntarily around the world, but the GNU project was generally associated with universities in the USA. Linus Torvalds is Finnish. GSM is actually built on TDMA, which is really a generic concept. I'm not trying to deflate EU research here, quite the opposite: I'm just saying that technology doesn't usually have a clear nationality unless you're talking about something so hyper-specific that it has only a few applications.

The reason CERN’s web concept did not become an entrepreneurial triumph is that there was no conceivable business model by which such a format could have made money. HTML, for example, is a fundamental element of the Web; it's the component that Tim Berners-Lee developed. Standards, by their nature, require a complex business plan to make any money at all: give away the standard so it’s propagated; give away a user access tool, like Acrobat Reader or Flash Player, so people can see content that complies with the standard; and sell something that the new medium creates a demand for, like Acrobat Writer, Flash, or CGI applications. That Mr. Berners-Lee couldn’t think of a way to make money off the HTML standard should come as no surprise. Likewise, his browser was not a commercial success; neither was Mosaic.

Many American entrepreneurs have gotten rich from building businesses around the Internet. Tim Berners-Lee, the British scientist who worked at the European Particle Physics Laboratory in Geneva (CERN) when he invented the World Wide Web, did not. At least, not until last June, when he was awarded a €1.2-million ($1.5-million) technology prize.

Europe also fell behind in computers in the 1970s, software in the 1980s, and broadband in the 1990s.

These are not industries that it is prudent to develop from the point of view of industrial policy (except for broadband). Computer manufacturing is capital-intensive, with extremely high levels of risk and concentrated returns. In the USA, there were some early successes that have had little favorable impact on US industry per se: the technology itself was adopted irrespective of where it originated, and the companies that made fortunes, like Apple, IBM, Compaq, and so on, enjoyed only a very transitory phase of wealth creation. Besides, EU member states have never flourished in those industries.

Software is even worse. In the case of MS, about 99% of its immense return on investment has consisted of rents torn from other enterprises, often inflicting an opportunity cost far greater than the revenues captured by MS. As for the other firms (Oracle, Adobe, and the lot), much of the coding was eventually outsourced to other countries. The total number of individuals involved was tiny, and the period in which the USA reaped any sort of competitive advantage from Silicon Valley was actually quite brief. The immense fortunes made there were fetishized by business magazines, but were in reality a disaster for American enterprise generally: venture capital, usually managed by prudent, hard-nosed people, was sucked into a frenzy much like the South Sea Bubble, then dissipated on stupendous waste. The new technology that emerged consists of standard items that can be manufactured anywhere. The profitable business is in proprietary devices like microchips, which are manufactured overseas.

“In the end that did not happen, in part because European research is far too dirigiste, planned top down, and in such attempts at detail, serendipity can play no role,” says Mr. Negroponte. “If you visit the MIT Media Lab, there is considerable chaos and it is highly unstructured. Its interdisciplinary nature, itself hard to do in Europe, guarantees a high degree of innovation.” Media Lab Europe shut its doors earlier this year.

If EU research is dirigiste, that’s a problem having to do with the relationship between states and institutions; MIT Media Lab reflects the dynamics inside a university. A fairer comparison would set the climates within American research facilities of different provenance against those of their many counterparts in the EU. Again, comparing a lab at MIT where there is chaos to a totally different entity in the EU that closed is not merely a cheap shot; it’s comparing apples and oranges. MIT Media Lab is basically an outgrowth of the MIT university culture; Media Lab Europe was a venture between MIT and the Irish government to transplant that same culture into Dublin. With all respect to Prof. Negroponte, he was a co-founder of MIT Media Lab and a collaborator in the scheme to recreate it in Dublin; it is reasonable to expect him to try to blame his failure on those stodgy Europeans.

The article mentions the European sense of entitlement, although it is in the USA that gigantic, accountability-proof payments are made to top management. It likewise points to the existence of some people who are suspicious of capitalism generally (as opposed to the USA, where ideological conformity is far more strictly enforced), and it indulges in a disjointed, complacent rant about national characters.

The last part of the article is actually not so bad, with acknowledgment that the EU is definitely making changes to facilitate startups and make labor markets more flexible. However, the implication is that there is only one way to grow technology, and that way involves startups with socialization of the costs, the model that prevails in the USA. In fact, EU firms innovate at least as much as their American counterparts do, but they use different approaches. Usually, research is done inside existing firms or their foundations (Stiftungen), not in startups. Had Red Herring focused on the Republic of Korea, Taiwan, or Japan, its observations would have been more apt, but more obviously irrelevant. Startups in Japan aren’t impossible, but they’re damn close to impossible. Yet Japanese industry is world-beating. Korea’s staggering lead in consumer electronics makes the USA look like the Flintstones, but it was achieved through state-chaebol collaboration.

Again, there is no one model. EU member states are optimized for state-large enterprise-university collusion; the USA, for startups, privatization of profits, and socialization of costs; NE Asia, for coordinated division of labor between conglomerates and the finance ministry.

Labels: economics, technology

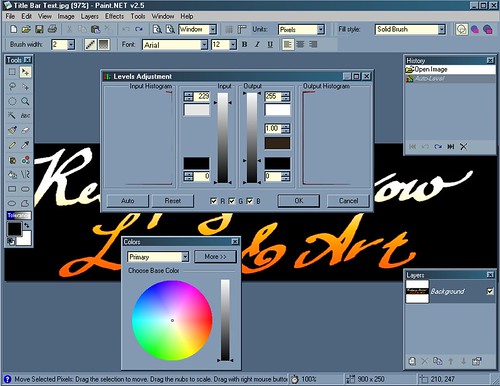

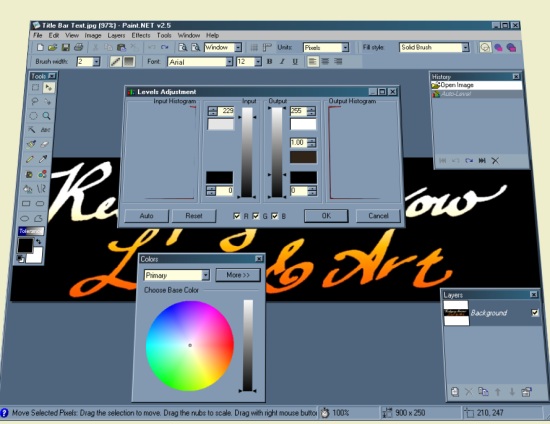

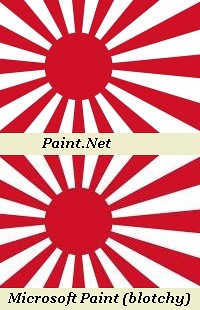

Windows comes bundled with a program called Paint. Click the Start button and select Programs, then select Accessories. It should be there along with the DOS shell and WordPad. If you've used it a lot, as I have, you know it has some serious limitations. The worst is the way it saves JPEGs. Basically, if you open a bitmap file and save it as a JPEG, it looks like... er, uh, it looks terrible. Suppose the bitmap is the Japanese national flag. In BMP, this is a circle of solid red on a field of solid white. Save it as a JPEG, and there's a mist of tiny reddish ripples leaking into the white. Immaculate faces look as if they suffer from severe acne or scarring.
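Why a flat image suffers so badly: JPEG splits the picture into 8x8 pixel blocks, converts each block into frequency coefficients with a discrete cosine transform (DCT), and then rounds those coefficients coarsely, which is where data (and quality) gets thrown away. A hard edge, like the rim of a red disc on white, needs many high-frequency coefficients, and rounding them off leaves ripples on both sides of the edge. Here is a simplified Python sketch of that effect, not Paint's actual encoder: it assumes a single grayscale channel, uses one hypothetical uniform rounding step (`step`) instead of JPEG's per-frequency quantization tables, and skips color conversion and entropy coding. The helper names are made up for the illustration.

```python
import math

N = 8  # JPEG compresses images in 8x8 pixel blocks


def alpha(k):
    # Orthonormal scaling factor for the DCT-II basis functions
    return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)


def basis(i, k):
    # Cosine basis value for pixel index i and frequency index k
    return math.cos((2 * i + 1) * k * math.pi / (2 * N))


def dct2(block):
    # Forward 2-D DCT-II: pixels -> frequency coefficients, result[u][v]
    return [[alpha(u) * alpha(v) * sum(block[x][y] * basis(x, u) * basis(y, v)
                                       for x in range(N) for y in range(N))
             for v in range(N)] for u in range(N)]


def idct2(coeffs):
    # Inverse 2-D DCT: frequency coefficients -> pixels, result[x][y]
    return [[sum(alpha(u) * alpha(v) * coeffs[u][v] * basis(x, u) * basis(y, v)
                 for u in range(N) for v in range(N))
             for y in range(N)] for x in range(N)]


def edge_block():
    # Hypothetical test block: solid white (255) on the left half, solid black (0) on the right
    return [[255 if y < N // 2 else 0 for y in range(N)] for _ in range(N)]


def roundtrip(block, step):
    # Level-shift pixel values to be centred on 0, as JPEG does before the DCT
    shifted = [[p - 128 for p in row] for row in block]
    coeffs = dct2(shifted)
    # The lossy step: snap every coefficient to a multiple of `step`
    # (a uniform step, NOT JPEG's per-frequency quantization table)
    quantized = [[round(c / step) * step for c in row] for row in coeffs]
    pixels = idct2(quantized)
    # Undo the level shift and clamp back into the 0..255 range
    return [[min(255, max(0, round(p + 128))) for p in row] for row in pixels]


if __name__ == "__main__":
    for row in roundtrip(edge_block(), step=100):
        print(row)
```

With `step=100`, some pixels in the white half come back slightly below 255, a one-dimensional version of the "mist" leaking into the white; shrinking `step` makes the round trip nearly lossless. Many real encoders also store color at reduced resolution (chroma subsampling), which further smears color across sharp red/white boundaries.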
